Interactive Holographic Display System (IHDS)

by Lyuxing He

Overview

The main purpose of the Interactive Holographic Display System (IHDS) project is to develop a visualization tool that supports education.

It’s widely accepted that seeing visualized examples and interacting with them is one of the most effective ways to learn. For example, Newton’s Second Law is easy to understand when the motion of objects under external forces is demonstrated. Demonstrating abstract concepts that are hard to observe, or that have no direct counterpart in real life, is much harder. To help students understand such concepts, IHDS aims to provide an interactive visualization platform that displays the electron density volumes of different chemical molecules and lets students manipulate them intuitively with their bare hands. Professors can also use the platform during lectures to make their teaching materials easier to understand.

The Volumetric Model

The input to IHDS is a text file containing the raw electron density data of a specific chemical, from which IHDS constructs a volumetric model that Unity can render in 3D.

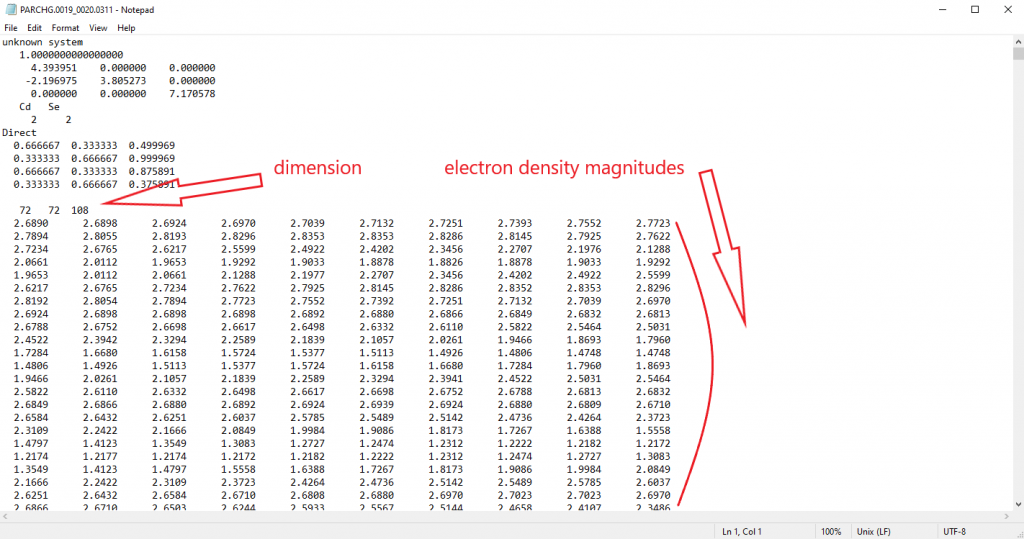

- The Raw Dataset

The electron density rendered in IHDS is originally exported from VESTA, a 3D visualization program for structural models and volumetric data that is widely used by students and faculty. Here we use a VASP output file describing a specific material’s partial electron density distribution, the PARCHG file, which contains all the information needed for rendering in a fixed format. The first few lines describe the atomic geometry and are not needed for this example. The first piece of information relevant to rendering is a line containing only three numbers: the grid dimensions of the electron density volume along X, Y, and Z, respectively. The lines that follow hold the raw electron density values, with the X index varying fastest: in (x, y, z) coordinates, the first value corresponds to (0, 0, 0), the second to (1, 0, 0), the third to (2, 0, 0), and so on.

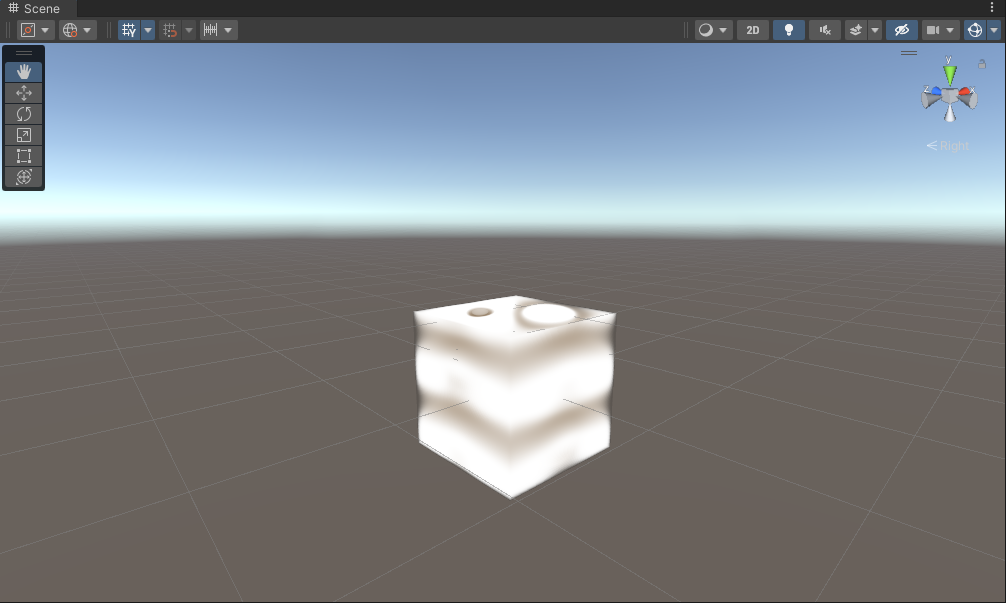

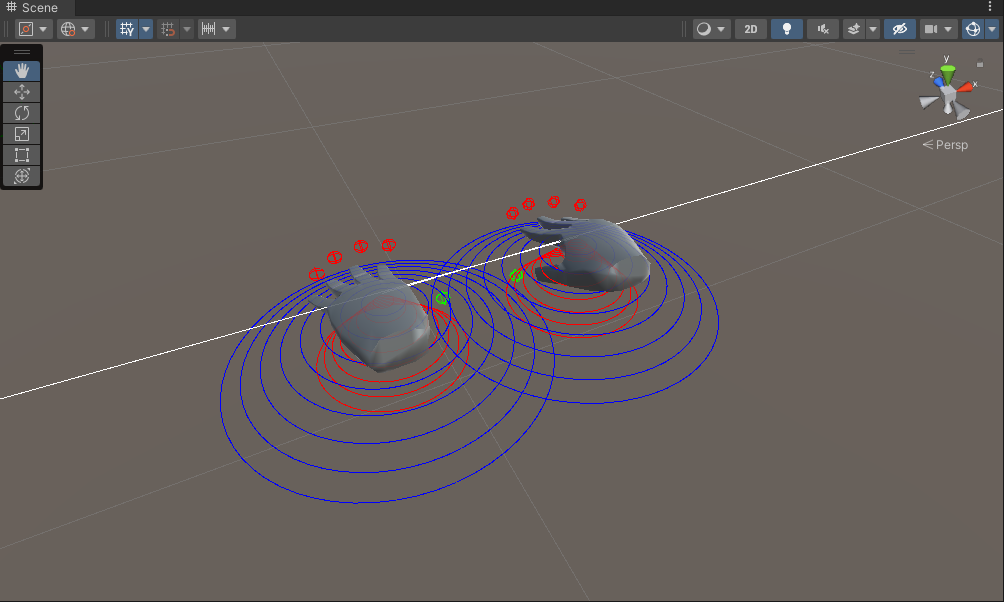
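To make the file layout concrete, here is a minimal Python sketch of reading a PARCHG-style file. It assumes, as in VASP’s CHGCAR/PARCHG format, that a blank line separates the atomic-geometry header from the grid-dimension line and that values are listed with x varying fastest. The names `parse_parchg` and `density_at` are hypothetical; this is not the IHDS importer, which runs inside Unity.

```python
from typing import List, Tuple

Dims = Tuple[int, int, int]


def parse_parchg(text: str) -> Tuple[Dims, List[float]]:
    """Return the grid dimensions and the flat list of density values."""
    lines = text.splitlines()
    # Assumption: the first blank line ends the atomic-geometry header and
    # the line right after it holds the grid dimensions NX NY NZ.
    blank = next((i for i, line in enumerate(lines) if not line.strip()), None)
    if blank is None or blank + 1 >= len(lines):
        raise ValueError("no grid-dimension line found after the header")
    nx, ny, nz = (int(tok) for tok in lines[blank + 1].split())
    count = nx * ny * nz
    values: List[float] = []
    # Read exactly NX*NY*NZ numbers; anything after them is ignored.
    for line in lines[blank + 2:]:
        for tok in line.split():
            values.append(float(tok))
            if len(values) == count:
                return (nx, ny, nz), values
    raise ValueError(f"expected {count} values, found {len(values)}")


def density_at(dims: Dims, values: List[float], x: int, y: int, z: int) -> float:
    """Look up grid point (x, y, z); x varies fastest, then y, then z."""
    nx, ny, _ = dims
    return values[x + nx * (y + ny * z)]
```

Keeping the data flat and computing the index on lookup mirrors how the values appear in the file, so no reordering is needed until the texture is built.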

- The Unity GameObject

In Unity, the dataset importer I created extracts all the necessary information (dimensions, raw data, etc.) from the VASP file and packs it into a VolumeDataset instance. The VolumeRenderedObject class then uses this instance to generate a 3D texture voxel by voxel, based on the magnitude of the electron density at each coordinate of the raw dataset, and produces a rendered game object, VolumeRenderedObject_, in the Unity world. The transfer function used to sweep the isovalue is predefined and can also be accessed through the VolumeRenderedObject class.
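The texture step can be sketched as two small functions: one normalizes the raw densities to [0, 1] (a common choice for volume textures), and one is a toy transfer function that hides voxels below an isovalue and ramps opacity up above it. This is an illustrative Python sketch under those assumptions, not the actual VolumeRenderedObject code or its predefined transfer function; `normalize`, `transfer`, `build_texels`, and the `width` ramp parameter are hypothetical.

```python
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]


def normalize(values: List[float]) -> List[float]:
    """Map raw densities linearly onto [0, 1] using the dataset's min and max."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A constant field has no contrast; map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]


def transfer(density: float, isovalue: float, width: float = 0.05) -> RGBA:
    """Toy transfer function on a normalized density.

    Voxels below the isovalue are fully transparent. Above it, opacity ramps
    linearly from 0 to 1 over `width`, and brightness follows the density.
    """
    if density < isovalue:
        return (0.0, 0.0, 0.0, 0.0)
    alpha = 1.0 if width <= 0 else min(1.0, (density - isovalue) / width)
    return (density, density, density, alpha)


def build_texels(values: List[float], isovalue: float) -> List[RGBA]:
    """Convert a flat density array into one RGBA texel per voxel."""
    return [transfer(d, isovalue) for d in normalize(values)]
```

Sweeping the isovalue then only means re-evaluating `transfer` with a new threshold, which is one reason to keep the transfer function separate from the normalized density data.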

The Hologram

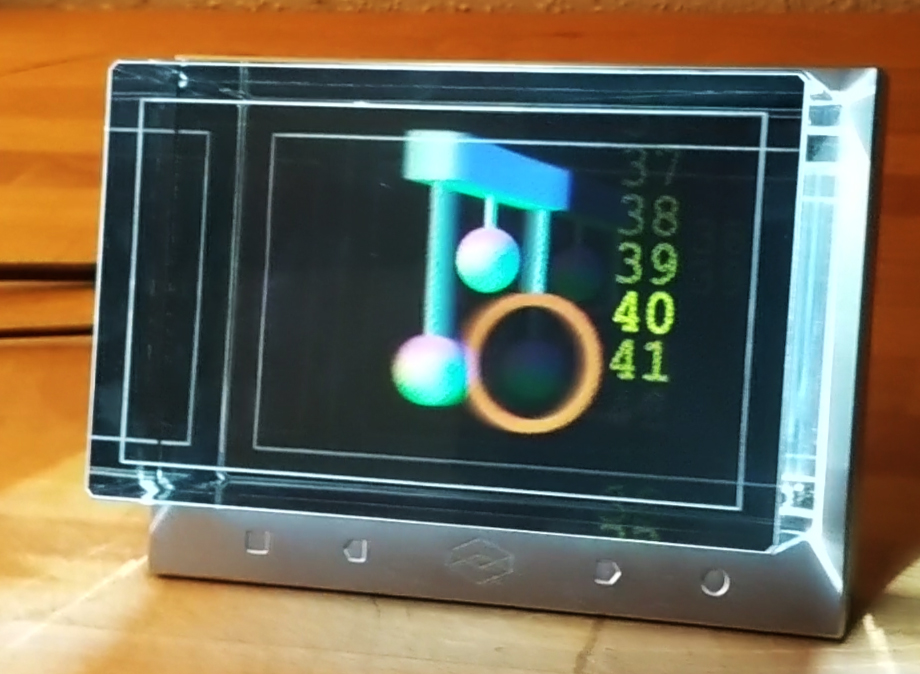

A hologram is a virtual 3D image that can be viewed at different angles. The IHDS uses the Looking Glass as a hologram rendering platform. The hologram rendered by IHDS is constructed based on two principles: multi-viewability and paradox with blur.

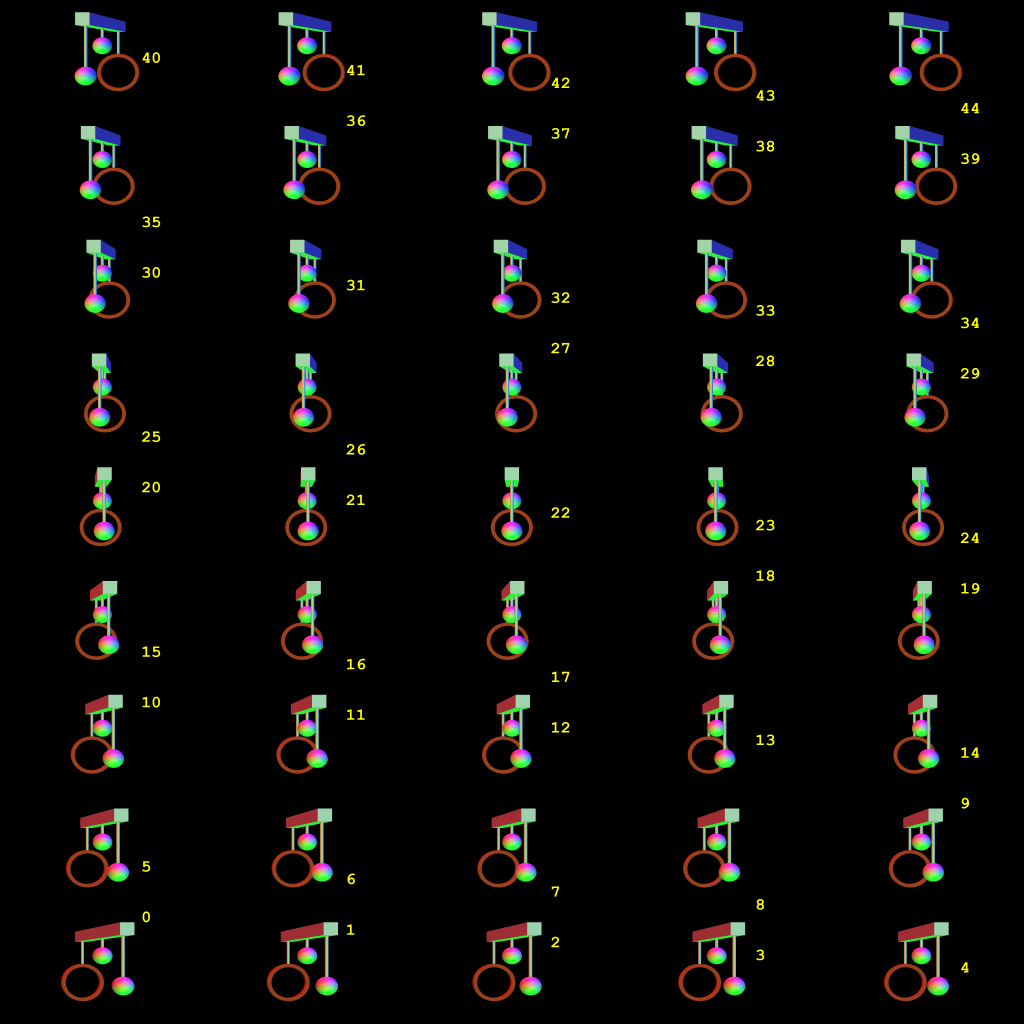

- Multi-viewability

The multi-viewability is constructed based on the quilt image – a class of 2D images of 3D volume from different views. Using the Looking Glass Documentation for illustration purposes, the following picture shows the full quilt image. The bottom left of the quilt is the left-most angle of the scene, and the top right is the right-most angle, and the Looking Glass device is capable of rendering the quilt image as a hologram shown in the next section. To achieve multi-viewability, quilt images have to be manually generated due to the need of dynamic control of transfer function parameters. I’ve written methods that automatically generate a quilt image and are chained after the dataset importer. 2D slices of the 3D texture created by the dataset are taken and stored locally for real-time rendering.

- Paradox with Blur

Applying paradox with blur adds more feelings of reality to the hologram by emphasizing the important image and blurring the others. As shown below, the Looking Glass will present multiple images simultaneously, and the one being emphasized has the most intensity in some sense. This allows the viewer to not discretely hop from one view to the next but rather crossfade amongst many. Paradox with blur effect can be automatically applied on top of the quilt image when rendered by the Looking Glass.
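The quilt layout and the crossfade between neighboring views can be sketched in a few lines of Python. The sketch assumes views are numbered from 0 (left-most) and fill the quilt row by row starting at the bottom-left tile, matching the description above. The grid size and view cone used below are hypothetical values, not the parameters of a particular Looking Glass device, and `blend_weights` is only a conceptual model (linear interpolation between the two nearest views) of a crossfade that the Looking Glass produces on its own.

```python
import math
from typing import List, Tuple


def quilt_tile(view: int, columns: int, rows: int) -> Tuple[int, int]:
    """Return the (column, row) tile of a view, with row 0 at the bottom.

    View 0 (left-most angle) is the bottom-left tile and the last view
    (right-most angle) is the top-right tile; rows fill left to right.
    """
    if not 0 <= view < columns * rows:
        raise ValueError("view index out of range")
    return view % columns, view // columns


def view_angles(num_views: int, view_cone_deg: float) -> List[float]:
    """Spread camera yaw angles evenly across the view cone, left to right."""
    if num_views == 1:
        return [0.0]
    step = view_cone_deg / (num_views - 1)
    return [-view_cone_deg / 2 + i * step for i in range(num_views)]


def blend_weights(position: float, num_views: int) -> List[float]:
    """Crossfade weights for a continuous viewer position in [0, num_views - 1].

    The two nearest views share the weight linearly, so a moving eye fades
    smoothly between neighbors instead of hopping from one view to the next.
    """
    position = min(max(position, 0.0), num_views - 1)
    lower = math.floor(position)
    frac = position - lower
    weights = [0.0] * num_views
    weights[lower] = 1.0 - frac
    if frac > 0:
        weights[lower + 1] = frac
    return weights
```

For example, with a hypothetical 8-column, 6-row quilt, `quilt_tile(0, 8, 6)` gives `(0, 0)` (bottom-left) and `quilt_tile(47, 8, 6)` gives `(7, 5)` (top-right), while `blend_weights(2.25, 5)` gives `[0.0, 0.0, 0.75, 0.25, 0.0]`.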

The Gesture Control

Gesture control is achieved by analyzing a virtual hand model in Unity. The hand model game object is constructed from raw images streamed by the Leap Motion device using some straightforward computer vision techniques, and the approximate distances to each joint of the hand are stored in the Unity game object as well.

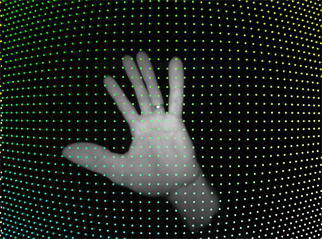

- Process Raw Images

The Leap Motion device uses infrared stereo cameras as its tracking sensors. Images captured through lenses are usually distorted because light rays bend as they pass through the lenses. The true angles of the light rays can be recovered using the calibration map provided by the manufacturer. Using the camera’s intrinsic and extrinsic matrices, also supplied by the manufacturer, I restored the distorted images and fed them into the model-fitting algorithm.
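The manufacturer’s calibration map is specific to the device, so the sketch below swaps in a textbook two-coefficient radial (Brown-Conrady) distortion model purely to show how intrinsics are used to undo lens distortion. The coefficients `k1`, `k2` and the intrinsic values in the test are hypothetical. Only the intrinsics are needed to undistort one camera’s image; the extrinsics come into play when the two cameras’ views are combined.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics: focal lengths and principal point, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


def distort_normalized(x: float, y: float, k1: float, k2: float) -> Tuple[float, float]:
    """Apply two-term radial distortion to normalized image coordinates."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort_pixel(u: float, v: float, K: Intrinsics, k1: float, k2: float,
                    iterations: int = 20) -> Tuple[float, float]:
    """Invert the radial model for one pixel by fixed-point iteration.

    The distorted pixel is normalized with the intrinsics, the radial scale is
    divided out repeatedly until it settles, and the result is projected back
    to pixel coordinates.
    """
    xd = (u - K.cx) / K.fx
    yd = (v - K.cy) / K.fy
    x, y = xd, yd  # initial guess: no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return K.fx * x + K.cx, K.fy * y + K.cy
```

Fixed-point iteration converges quickly when the distortion is mild, as it is near the image center; strongly distorted wide-angle lenses usually call for a lookup table, which is what a calibration map provides.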

Leap Motion provides a 3D hand model as a GameObject in Unity. With this model at hand, I use the restored images to map spatial information about the hands (joints, fingers, palms, etc.) onto the hand model. Background subtraction and feature segmentation are run on the images from both cameras, and the centers of the hand components are estimated. Knowing the focal length of the cameras, I estimated the 3D location of each hand component’s center from the 2D images, and these estimates are then mapped onto the hand GameObject.
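Recovering depth from two 2D images needs the stereo baseline (the distance between the cameras) in addition to the focal length: for rectified images, depth is Z = f·B/d, where d is the horizontal disparity. The sketch below pairs a simple background-subtraction centroid with that triangulation. It assumes rectified, undistorted images, equal focal lengths along both axes, and hypothetical threshold and baseline values, and it locates one blob center rather than individual joints, so it is a simplified stand-in for the segmentation described above.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale IR image stored as rows of pixel intensities


def foreground_centroid(image: Image, background: Image,
                        threshold: int) -> Optional[Tuple[float, float]]:
    """Background subtraction followed by the centroid of the foreground mask.

    A pixel is foreground when it exceeds the background frame by more than
    `threshold`. Returns the mean (u, v) of those pixels, or None if none.
    """
    count = 0
    sum_u = 0
    sum_v = 0
    for v, (row, bg_row) in enumerate(zip(image, background)):
        for u, (value, bg) in enumerate(zip(row, bg_row)):
            if value - bg > threshold:
                count += 1
                sum_u += u
                sum_v += v
    if count == 0:
        return None
    return sum_u / count, sum_v / count


def triangulate(left: Tuple[float, float], right: Tuple[float, float],
                focal_px: float, baseline_mm: float,
                cx: float, cy: float) -> Tuple[float, float, float]:
    """Recover a 3D point (mm, left-camera frame) from a rectified stereo pair."""
    disparity = left[0] - right[0]
    if disparity <= 0:
        raise ValueError("expected positive disparity (u_left > u_right)")
    z = focal_px * baseline_mm / disparity
    x = (left[0] - cx) * z / focal_px
    y = (left[1] - cy) * z / focal_px
    return x, y, z
```

In practice the same two steps would be repeated for each segmented hand component, with each center matched between the left and right images before triangulating.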

- Analyzing the Hand Model

Once the virtual hand skeleton model is built, interaction with the displayed volume can be implemented with simple detector logic. For example, the bend angles of the fingers are calculated and, after some fine-tuning to maximize controllability, used as the yaw offset of the rendered volume.
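The bend-angle detector can be sketched as follows: the angle between two consecutive finger segments is computed from three joint positions, then passed through a dead zone, a gain, and a clamp to obtain a yaw offset. The choice of joints and the parameter values (`deadzone_deg`, `gain`, `max_yaw_deg`) are hypothetical stand-ins for the fine-tuning mentioned above, and the function names are illustrative, not part of the Leap Motion API.

```python
import math
from typing import Sequence


def bend_angle_deg(base: Sequence[float], middle: Sequence[float],
                   tip: Sequence[float]) -> float:
    """Angle between two finger segments in degrees; 0 means a straight finger."""
    seg1 = [m - b for m, b in zip(middle, base)]
    seg2 = [t - m for t, m in zip(tip, middle)]
    norm1 = math.sqrt(sum(c * c for c in seg1))
    norm2 = math.sqrt(sum(c * c for c in seg2))
    if norm1 == 0 or norm2 == 0:
        return 0.0  # degenerate joints: treat as straight
    cos_theta = sum(a * b for a, b in zip(seg1, seg2)) / (norm1 * norm2)
    # Clamp to guard against rounding just outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def yaw_offset_deg(bend_deg: float, deadzone_deg: float = 10.0,
                   gain: float = 0.5, max_yaw_deg: float = 45.0) -> float:
    """Map a bend angle to a yaw offset using a dead zone, gain, and clamp."""
    effective = max(0.0, bend_deg - deadzone_deg)
    return min(max_yaw_deg, gain * effective)
```

The dead zone keeps a relaxed, slightly curled hand from rotating the volume, and the clamp caps how far a single gesture can turn it.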

Results

- Zoom

With your hands released, moving them away from or toward each other zooms the rendered electron density volume in or out, respectively.

- Tilt

Tilting your hands up or down tilts the rendered electron density volume accordingly.

- Rotate

Curling your left or right hand rotates the rendered electron density volume counterclockwise or clockwise, respectively.

- Change Isovalue

Once you fix your hands to switch to isovalue-sweeping mode, moving your hands away from or toward each other increases or decreases the isovalue of the rendered electron density volume, respectively. Releasing your hands exits isovalue-sweeping mode and keeps the isovalue you just set.

- Reset

Snapping the fingers of your right hand resets the volume to its initial state.
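The zoom, isovalue, and reset behaviors can be modeled as a small state machine driven by per-frame hand data. In this Python sketch, `HandFrame.fixed` is a hypothetical flag standing in for whatever pose detection switches IHDS into isovalue-sweeping mode, the gains and clamps are illustrative, and tilt and rotation are left out for brevity. The controller ignores the frame on which the mode changes, so entering or leaving isovalue-sweeping mode causes no jump, and releasing the hands leaves the last isovalue in place.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HandFrame:
    left_palm: Vec3
    right_palm: Vec3
    fixed: bool  # hypothetical flag: hands held in the isovalue-sweeping pose


@dataclass
class VolumeState:
    scale: float = 1.0
    isovalue: float = 0.5  # normalized isovalue in [0, 1]


class GestureController:
    """Turns per-frame palm positions into zoom and isovalue changes."""

    def __init__(self, zoom_gain: float = 1.0, iso_gain: float = 0.5) -> None:
        self.zoom_gain = zoom_gain
        self.iso_gain = iso_gain
        self.state = VolumeState()
        self._last_distance: Optional[float] = None
        self._last_fixed = False

    def update(self, frame: HandFrame) -> VolumeState:
        distance = math.dist(frame.left_palm, frame.right_palm)
        # Act only when the previous frame was in the same mode, so that
        # switching modes never produces a sudden jump.
        if self._last_distance is not None and frame.fixed == self._last_fixed:
            delta = distance - self._last_distance  # > 0 when hands move apart
            if frame.fixed:
                # Isovalue-sweeping mode: apart raises, together lowers.
                new_iso = self.state.isovalue + self.iso_gain * delta
                self.state.isovalue = min(1.0, max(0.0, new_iso))
            else:
                # Released hands: apart zooms in, together zooms out.
                new_scale = self.state.scale * (1.0 + self.zoom_gain * delta)
                self.state.scale = max(0.1, new_scale)
        self._last_distance = distance
        self._last_fixed = frame.fixed
        return self.state

    def reset(self) -> None:
        """Restore the initial state, e.g. after a right-hand snap."""
        self.state = VolumeState()
```

Feeding the controller relative changes in palm distance, rather than absolute positions, means the gesture works the same wherever the hands start above the sensor.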

Contribution and Appreciation

The GitHub page for this project is here.

The volumetric models are mostly derived from Matias Lavik’s work. Much appreciated!

Special thanks to the NCSA and its SPIN program for their support, and to my supervisor, Prof. Schleife, for all the guidance and resources!